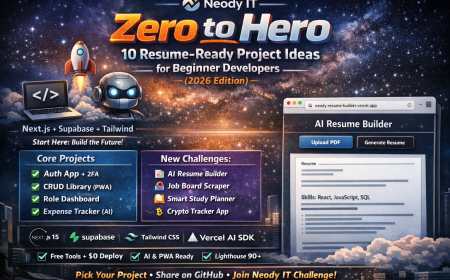

AI & ML Roadmap 2026: Beginner to Pro in 6 Months

Master AI & Machine Learning in 2026! Free beginner roadmap: Python, projects, deployment. From zero to pro jobs in 6 months. Neody IT + Lofer.tech guide. Start today!

Hey future AI builder!

AI/ML jobs have exploded (LinkedIn reports roughly 75% year-over-year growth in AI/ML roles), but an estimated 90% of beginners quit from overwhelm. Not you. This complete 10-step roadmap from Neody IT takes you from zero coding to deployed models and freelance gigs in 6 months.

Lofer.tech Insta squad + Neody IT readers: Python basics → real projects → $50/hr jobs. No degree needed, just steady practice (the timeline assumes 10-15 hrs/week).

Ready? Dive in - your first model awaits.

Introduction: Why AI & Machine Learning is the Skill of the Future

Picture this: A few years back, I was scrolling through job listings, feeling stuck in a dead-end gig. Then boom - AI popped up everywhere. Netflix recommending your next binge-watch, your phone's voice assistant cracking jokes, even doctors spotting diseases faster than ever. That's not sci-fi; that's AI and Machine Learning (ML) changing the game right now. If you're like me back then, wondering if it's time to jump in, stick around. This 2026 guide from Neody IT (and a quick shoutout to our Lofer.tech fam on Insta) is your no-BS roadmap to getting started.

The Rise of AI in Industry

AI isn't some distant dream anymore - it's exploding across every corner of work and life. Companies like Google, Amazon, and even small startups are pouring billions into it. Remember ChatGPT blowing up in 2023? That was just the spark. Fast-forward to 2026, and AI's handling everything from self-driving cars to personalized shopping.

-

Job boom: LinkedIn reports AI/ML roles jumped 74% year-over-year in 2025 alone. Fields like healthcare (predicting outbreaks), finance (spotting fraud), and entertainment (generating music) are all in.

-

Everyday wins: Think Spotify's playlists or Uber's route magic - that's ML crunching data to make smart guesses.

-

Big players leading: Tesla's autonomous driving, IBM's Watson in hospitals - it's creating million-dollar industries overnight.

The best part? You don't need a PhD to join. Industries are desperate for fresh talent who can build real stuff.

Hype vs. Real Skills: Cutting Through the Noise

Social media's full of "AI will take all jobs!" panic or "Millionaire by mastering one prompt!" fluff. Lofer.tech followers, you've seen those Reels - hype city. But here's the truth: AI/ML is 10% buzz, 90% practical skills that pay off big.

-

Hype: Flashy demos like deepfakes or robot artists steal the show, but they distract from the grind.

-

Real skills: It's about teaching computers patterns - like training a dog with treats (data). Core stuff: coding basics, data handling, and simple models that solve actual problems.

-

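Proof in numbers: The World Economic Forum's Future of Jobs Report 2020 estimated that 97 million new roles could emerge by 2025 as work shifts between humans, machines, and algorithms. Those roles reward people who learn the hands-on tools, not people who just watch tutorials.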

Skip the hype; focus on building. That's how Neody IT readers turn curiosity into careers.

Who Should Learn AI/ML?

Anyone with a laptop and curiosity! No gatekeeping here. If you're:

-

A student eyeing tech jobs.

-

A career-switcher tired of the 9-5 grind.

-

A marketer, designer, or teacher wanting an edge (AI automates boring tasks so you shine).

-

Heck, even hobbyists - build your own chatbot for fun.

Lofer.tech crew, if you're into quick hacks on Insta, this is your glow-up. By 2026, AI literacy is like knowing Excel today - everyone needs it.

Common Beginner Mistakes (And How to Dodge Them)

I've been there - wasted weeks on wrong paths. Don't repeat these:

-

Jumping into advanced stuff too soon: Skip the 500-page books; start with Python basics.

-

Letting math fears stop you: You need high-school algebra, not rocket science. Libraries like NumPy and scikit-learn handle the heavy lifting, and Google Colab gives you the computing power for free.

-

Chasing every trend: Focus on fundamentals first - hype tools fade fast.

-

Not building projects: Theory alone flops. Make a simple predictor (like movie ratings) to make it stick.

Nail these, and you're golden. Ready to dive deeper? Let's roadmap the rest.

Understanding What AI and Machine Learning Actually Mean

Okay, let's back up a sec. Before we geek out on code, imagine AI as your super-smart buddy who learns from experience. I remember my first "aha" moment: My phone guessed my next word in a text. Magic? Nope - just smart tech. Neody IT readers and Lofer.tech squad, this section breaks it down like peeling an onion, layer by layer. No jargon overload, promise.

Artificial Intelligence vs Machine Learning vs Deep Learning

These terms get tossed around like confetti, but they're family, not strangers.

-

Artificial Intelligence (AI): The big umbrella. It's any tech mimicking human smarts - like Siri chatting or a chess bot crushing grandmasters. Goal: Make machines "think."

-

Machine Learning (ML): AI's workhorse. Instead of hard-coding every rule, ML lets computers learn from data. Feed it examples, and it spots patterns. Think: Teaching a kid colors by showing pics, not lectures.

-

Deep Learning (DL): ML on steroids. Uses "neural networks" (brain-inspired layers) for mega-complex stuff like image recognition. Powers your Instagram filters or self-driving cars.

Simple rule: AI is the dream, ML is the method, DL is the muscle. Most jobs start with ML basics.

Real-World Examples That'll Blow Your Mind

Theory's cool, but seeing it live? Game-changer. Here's AI/ML in action today:

-

Netflix/Spotify: ML analyzes your likes to suggest bangers. (Supervised learning magic.)

-

Facial recognition: Unlock your phone or tag friends on Facebook - DL spots your mug in milliseconds.

-

Amazon recommendations: "Customers also bought..." That's ML mining shopping habits for patterns without labels (Amazon's classic approach is item-to-item collaborative filtering).

-

Healthcare heroes: ML flags heart-disease risk in scans, helping doctors catch problems earlier.

-

Games like AlphaGo: Reinforcement learning beat world champs at Go - pure trial-and-error smarts.

Lofer.tech peeps, next time you swipe to the next Reel, thank ML for the algo hooking you up.

Types of Machine Learning

ML comes in three flavors, like ice cream scoops. Pick based on your data and goal. Here's the breakdown:

Supervised Learning

Like a teacher with answer keys. You give labeled data (input + correct output), and it learns to predict.

-

Examples: Spam email filters (label: spam/not spam), house price predictors (size → price).

-

Best for: When you have tons of tagged examples. Easiest starter type!

Unsupervised Learning

No labels? No problem. It finds hidden patterns on its own, like sorting laundry without instructions.

-

Examples: Customer groups for marketing (group similar shoppers), anomaly detection (spot fraud).

-

Best for: Exploring messy data, discovering surprises.

Reinforcement Learning

Trial-and-error style, like training a puppy with treats. Agent tries actions, gets rewards/penalties, improves over time.

-

Examples: Robot vacuums navigating rooms, stock trading bots maximizing profits.

-

Best for: Games, robotics - where decisions build on past wins/losses.

Master these, and you're decoding 80% of real AI projects. Lofer.tech, imagine building your own recommendation bot for Insta stories!

Step 1 - Build Strong Programming Foundations (Python First)

Alright, roadmap time! Step 1 feels like learning to walk before running a marathon. I bombed my first AI project because I skipped coding basics - don't be me. Python's your trusty bike here: simple, powerful, and everywhere in AI. Neody IT crew and Lofer.tech followers, spend 2-4 weeks here, and you'll code like a pro. Let's roll.

Why Python Dominates AI

Flashback to 2018: I tried Java for ML - clunky nightmare. Switched to Python? Instant love. By 2026, it's king (over 80% of AI pros use it, per Stack Overflow surveys).

-

Beginner-friendly: Reads like English: print("Hello, AI world!") vs. pages of boilerplate.

-

AI powerhouse: Built for data science. Google, Meta, OpenAI all swear by it.

-

Huge community: Free tutorials galore - Kaggle, YouTube, fast fixes for "stuck" moments.

-

Future-proof: Powers 2026 hits like Grok AI and Stable Diffusion.

No Python? No AI party. It's the gateway drug.

Core Programming Concepts Beginners Must Know

Don't memorize; understand these building blocks. Practice with mini-challenges, like a tip calculator app.

-

Variables & Data Types: Boxes for info - numbers (age = 25), text (name = "Alex"), lists (fruits = ["apple", "banana"]).

-

Loops & Conditionals: Repeat stuff (for loops) or decide (if age > 18:).

-

Functions: Reusable code chunks. Like a recipe you call anytime: def greet(name): return f"Hi, {name}!"

-

Lists, Dictionaries, & Pandas basics: Handle data like a spreadsheet pro.

Pro tip: Code daily 30 mins on Codecademy or freeCodeCamp. Muscle memory kicks in fast.
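Here's a minimal sketch of the tip-calculator mini-challenge mentioned above, touching each building block from the list: variables, a list, a dictionary, a loop, a conditional, and a function. The function name split_bill and the tip rates are made up for illustration.

```python
def split_bill(total, people, service="good"):
    """Return each person's share of the bill, tip included."""
    # A dictionary maps service quality to a tip rate
    tip_rates = {"poor": 0.10, "good": 0.15, "great": 0.20}
    # Conditional: fall back to the "good" rate for unknown labels
    if service not in tip_rates:
        service = "good"
    tip = total * tip_rates[service]
    return round((total + tip) / people, 2)


# A list of (bill total, number of people, service) tuples
bills = [(100, 4, "good"), (60, 3, "great"), (45.5, 2, "meh")]

# Loop over the list and print each split
for total, people, service in bills:
    share = split_bill(total, people, service)
    print(f"${total} split {people} ways ({service} service): ${share} each")
```

Try changing the rates or adding a "tax" variable; small edits like that are exactly the daily practice the tip above recommends.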

Essential Python Libraries Overview

Libraries are pre-built tools - why reinvent the wheel? Install with pip (Python's app store). Here's your starter pack:

-

NumPy: Math magic for arrays. Speed up calculations like array + 5.

-

Pandas: Data wrangling. Load CSVs, clean mess, analyze like Excel on steroids.

-

Matplotlib/Seaborn: Charts & graphs. Visualize data to spot trends.

-

Scikit-learn: ML starter kit. Build models without headaches.

Lofer.tech squad, these turn raw data into Insta-worthy insights.

Development Environment Setup

Zero to hero in 10 minutes. My go-to for 2026:

-

Install Python: Grab from python.org (version 3.11+). Check with python --version in terminal.

-

Code Editor: VS Code (free, Microsoft). Extensions: Python, Jupyter.

-

Jupyter Notebooks: pip install notebook, then launch with jupyter notebook. Run code in chunks - perfect for experimenting.

-

Google Colab: Free cloud version. No setup! colab.research.google.com - ideal for Lofer.tech mobile coders.

-

Anaconda (optional): All-in-one bundle for libraries. Great for Windows newbies.

Test run: Open Colab, type print("I'm AI-ready!"). Boom - you're set.
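If you install locally instead of using Colab, a small script like this one (a sketch; the package list is just this step's starter pack) checks your Python version and which libraries are present, without crashing on the missing ones.

```python
import importlib.util
import sys

# Import name -> pip package name (they differ for scikit-learn)
STARTER_PACK = {
    "numpy": "numpy",
    "pandas": "pandas",
    "matplotlib": "matplotlib",
    "seaborn": "seaborn",
    "sklearn": "scikit-learn",
}


def check_environment(packages=STARTER_PACK, min_version=(3, 11)):
    """Return (python_ok, {import_name: installed?}) without importing the libraries."""
    python_ok = sys.version_info >= min_version
    # find_spec returns None when a top-level package can't be found
    installed = {name: importlib.util.find_spec(name) is not None for name in packages}
    return python_ok, installed


python_ok, installed = check_environment()
print(f"Python {sys.version.split()[0]}: {'OK' if python_ok else 'please upgrade to 3.11+'}")
for name, ok in installed.items():
    status = "installed" if ok else f"missing -> pip install {STARTER_PACK[name]}"
    print(f"  {name:<11}{status}")
```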

Nailed Step 1? You're not a beginner anymore. Pat yourself on the back, then let's hit Step 2.

Step 2 - Learn the Essential Math (Without Overwhelm)

Math giving you flashbacks to school nightmares? Breathe - AI math is way simpler than it sounds. I used to skip calc class, but grasped ML basics with just high-school refreshers. Think of it as gym weights for your brain: light lifts build strength for heavy models. Neody IT folks and Lofer.tech crew, aim for 3-5 weeks here. No proofs, just "why it matters" vibes. Tools like Khan Academy or 3Blue1Brown videos make it fun.

Linear Algebra Basics

Vectors and matrices? Sounds fancy, but it's just organizing numbers like a shopping list.

-

Vectors: Arrows with direction/magnitude. Example: Your location [x=5, y=3] on a map. ML uses them for data points.

-

Matrices: Grids of numbers. Like a spreadsheet: each row is a customer, each column an attribute (age, salary). Add/multiply them to transform data.

-

Why for AI?: Powers image pixels or recommendation engines. Python's NumPy handles it: vector1 + vector2.

Intuition: Rotate a photo? Matrix math twists pixels. Start with: Draw vectors on paper.
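To make that concrete, here's a small NumPy sketch with made-up numbers: adding two vectors, storing a tiny "spreadsheet" as a matrix, and rotating a point 90 degrees with a rotation matrix, the same kind of math that twists pixels when you rotate a photo.

```python
import numpy as np

# Vectors: positions on a map
home = np.array([5, 3])
trip = np.array([2, -1])
print(home + trip)  # element-wise addition -> [7 2]

# Matrix: rows are customers, columns are (age, salary in thousands)
customers = np.array([[25, 40], [32, 55], [47, 80]])
print(customers.shape)         # (3, 2): 3 rows, 2 columns
print(customers.mean(axis=0))  # column averages: mean age and mean salary

# Rotate the point (1, 0) by 90 degrees counter-clockwise
theta = np.pi / 2
rotation = np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])
rotated = rotation @ np.array([1, 0])  # @ is matrix multiplication
print(np.round(rotated, 6))    # approximately [0. 1.]
```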

Probability Fundamentals

Life's uncertain - ML loves that. Probability guesses "what's likely next?"

-

Basics: Coin flip (50% heads). Events: Independent (dice rolls) vs. dependent (cards without replacement).

-

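Bayes' Theorem (simple): Update beliefs with new info. You know how often rain leaves puddles; Bayes flips it around: "Given a puddle, how likely is it that it rained?" Spam filters use this: given the word "free", how likely is spam?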

-

Distributions: Normal (the bell curve, e.g. heights), where most values cluster around the average.

Why care? ML predicts uncertain stuff, like "Will this email be spam?" Practice: Simulate coin flips in Python.
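Here's the coin-flip practice as a quick sketch using Python's built-in random module, plus the spam version of Bayes' theorem worked through in code. The spam numbers (20% of mail is spam, "free" appears in half of spam and in 5% of normal mail) are hypothetical, chosen to keep the arithmetic easy.

```python
import random

# Simulate coin flips: the share of heads drifts toward 0.5 as flips pile up
random.seed(42)  # fixed seed so the run is repeatable
for n in (10, 1_000, 100_000):
    heads = sum(random.random() < 0.5 for _ in range(n))
    print(f"{n:>7} flips: {heads / n:.3f} heads")


def bayes(prior, likelihood, false_alarm_rate):
    """P(H | E) = P(E | H) * P(H) / P(E), with P(E) summed over H and not-H."""
    evidence = likelihood * prior + false_alarm_rate * (1 - prior)
    return likelihood * prior / evidence


# Hypothetical: P(spam)=0.2, P("free" | spam)=0.5, P("free" | not spam)=0.05
p_spam_given_free = bayes(prior=0.2, likelihood=0.5, false_alarm_rate=0.05)
print(f"P(spam | 'free') = {p_spam_given_free:.3f}")  # 0.714
```

By hand: 0.5 × 0.2 = 0.10 and 0.05 × 0.8 = 0.04, so P(spam | "free") = 0.10 / 0.14 ≈ 0.714. One word lifted the spam estimate from 20% to about 71%.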

Statistics for ML

Stats turns data chaos into insights. No PhD needed - just these:

-

Mean/Median/Mode: Averages. Mean salary? Sum divided by count.

-

Variance/Standard Deviation: Spread. High variance? Wild data (stock prices).

-

Correlation: Do two things move together? (Ice cream sales vs. hot days: yes.)

-

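Hypothesis Testing: "Is this real or luck?" A p-value tells you how likely a result at least this strong would be from pure chance; a small p-value (commonly below 0.05) suggests your model's edge isn't just luck.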

Real talk: Pandas summarizes this in one line. Example: Analyze your Netflix watch history for patterns.
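For example, on a tiny made-up ice-cream dataset, describe() returns count, mean, standard deviation, and quartiles (the 50% row is the median), and corr() measures whether the two columns move together.

```python
import pandas as pd

# Hypothetical daily data: temperature (°C) and ice-cream cones sold
df = pd.DataFrame({
    "temp_c": [18, 21, 24, 27, 30, 33],
    "cones_sold": [120, 135, 160, 180, 210, 240],
})

print(df.describe())           # count, mean, std, min, quartiles (50% = median), max
print(df["temp_c"].mean())     # 25.5
print(df["cones_sold"].var())  # sample variance (pandas uses ddof=1 by default)
print(df.corr())               # close to 1.0: hot days and sales move together
```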

Basic Calculus Intuition

Calculus? Slope of curves. Skip derivatives if overwhelmed - focus on "change."

-

Gradients: Point up the steepest slope. ML steps the opposite way, downhill, to minimize errors (that's gradient descent in training).

-

Why intuitive?: Imagine rolling a ball downhill to the lowest point - that's optimizing a model.

-

No formulas needed yet: Tools auto-compute. Visualize: 3Blue1Brown's "Essence of Calculus" series (10-min wins).

Lofer.tech tip: Plot a curve in Matplotlib, tweak slopes - see ML optimization live.
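Here's the ball-rolling-downhill idea as a minimal sketch in plain Python. The error curve f(w) = (w - 3)², its slope 2(w - 3), and the learning rate of 0.1 are made-up choices; real models do the same thing with millions of weights, and the frameworks compute the slopes for you.

```python
def loss(w):
    """A made-up error curve whose lowest point is at w = 3."""
    return (w - 3) ** 2


def slope(w):
    """Derivative of the loss: positive means uphill is to the right."""
    return 2 * (w - 3)


w = 0.0              # start somewhere on the curve
learning_rate = 0.1  # how big a step to take each time
for step in range(50):
    w -= learning_rate * slope(w)  # step opposite the slope, i.e. downhill
    if step % 10 == 0:
        print(f"step {step:>2}: w = {w:.4f}, loss = {loss(w):.6f}")

print(f"final w = {w:.4f}")  # very close to 3
```

Try a learning rate of 1.1 and watch w overshoot and blow up; that's why step size matters in training.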

Math done right feels empowering, not exhausting. Quiz yourself: Explain vectors to a friend. Ready for data? Step 3 awaits!

Step 3 - Data Handling and Analysis (The Real Core Skill)

Ever ordered a pizza and gotten a soggy mess? That's what raw datasets look like, and cleaning them up is often quoted as up to 80% of AI work. My first project flopped because I ignored this; now it's my secret weapon. Lofer.tech fam, think Insta analytics; Neody IT readers, enterprise goldmines. Spend 4-6 weeks here - it's the unglamorous grind that makes models shine. Python's NumPy/Pandas are your janitors.

Data Cleaning and Preprocessing

Garbage in, garbage out. Prep data like prepping ingredients for biryani.

-

Handle missing values: Drop 'em, fill with averages (df.fillna(df.mean(numeric_only=True))), or predict.

-

Outliers: Spot weirdos (e.g., 999-year-old in age column) with box plots; remove or fix.

-

Encoding: Turn text to numbers - "red/blue/green" → 0/1/2 labels, or one 0/1 column per color with pd.get_dummies().

-

Scaling: Make features comparable (ages 20-80, income 0-1M? Normalize to 0-1 range).

Pro move: Always explore first (df.describe()). Clean code example:

import pandas as pd

df = pd.read_csv('data.csv')

df.dropna(inplace=True) # Bye, blanks!
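The snippet above drops every row with a blank. Here's a fuller sketch of the whole checklist on a small made-up DataFrame (so it runs without a data.csv): fill blanks with the median, drop an impossible age, one-hot encode a text column, and min-max scale a number column. The column names and the 0-120 age range are assumptions for illustration.

```python
import pandas as pd

# Hypothetical raw data with blanks, an outlier, and a text column
df = pd.DataFrame({
    "age": [25, None, 47, 999, 33],
    "income": [40_000, 52_000, None, 61_000, 75_000],
    "color": ["red", "blue", "green", "red", "blue"],
})

print(df.isnull().sum())  # explore first: how many blanks per column?

# 1. Missing values: fill numeric blanks with each column's median
numeric_cols = ["age", "income"]
df[numeric_cols] = df[numeric_cols].fillna(df[numeric_cols].median())

# 2. Outliers: keep only plausible ages (0-120 is our assumed sanity range)
df = df[df["age"].between(0, 120)]

# 3. Encoding: one-hot encode the text column into 0/1 columns
df = pd.get_dummies(df, columns=["color"])

# 4. Scaling: squeeze income into the 0-1 range (min-max normalization)
income = df["income"]
df["income"] = (income - income.min()) / (income.max() - income.min())

print(df)
```

The median is used instead of the mean here on purpose: the 999-year-old would have dragged the mean age way up before we removed it.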

Working with NumPy and Pandas

NumPy: Fast arrays. Pandas: Excel 2.0 for tables (DataFrames).

-

NumPy: import numpy as np; arrays like arr = np.array([1,2,3]); math ops like arr * 2.

-

Pandas: import pandas as pd; load df = pd.read_csv('file.csv'); peek with df.head(10); filter df[df['age'] > 30].

-

Combo power: Pandas for loading, NumPy for speedy calcs.

Practice: Download Titanic dataset from Kaggle, slice survivors by class.
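Before downloading the real file, you can rehearse the moves on a tiny stand-in. The column names Survived, Pclass, and Age match the Kaggle Titanic CSV, but the eight rows here are invented.

```python
import numpy as np
import pandas as pd

# NumPy: element-wise math on a whole array at once
arr = np.array([1, 2, 3])
print(arr * 2)  # [2 4 6]

# Pandas: a toy stand-in for the Titanic data (the real one loads with pd.read_csv)
df = pd.DataFrame({
    "Pclass": [1, 1, 2, 2, 3, 3, 3, 3],
    "Age": [38, 35, 27, 54, 22, 4, 31, 19],
    "Survived": [1, 1, 1, 0, 0, 1, 0, 0],
})

print(df.head(3))          # peek at the first rows
print(df[df["Age"] > 30])  # filter: passengers older than 30

# Survival rate by class: the mean of a 0/1 column is the share who survived
survival_by_class = df.groupby("Pclass")["Survived"].mean()
print(survival_by_class)   # class 1: 1.0, class 2: 0.5, class 3: 0.25
```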

Data Visualization Basics

See to believe. Plots reveal secrets numbers hide.

-

Matplotlib: Basics - plt.plot(x, y); plt.show(). Lines, bars.

-

Seaborn: Prettier - sns.heatmap(df.corr(numeric_only=True)) for relationships.

-

Key charts: Scatter (correlations), histograms (distributions), boxplots (outliers).

Lofer.tech hack: Visualize your follower growth - spot peak post times!

Example:

import matplotlib.pyplot as plt

plt.hist(df['age'], bins=20)

plt.show()

Understanding Datasets

Data's the fuel - know your types.

-

Structured: Tables (CSV, SQL) - ages, prices. Most ML starts here.

-

Unstructured: Images, text, audio - fancy DL territory later.

-

Public gems: Kaggle (Titanic, Iris), UCI ML Repo. Start small: 1000 rows.

-

Ethics check: Bias? (e.g., dataset all men → skewed hiring AI). Always question sources.

By Step 3's end, wrangle data like a boss. Your model's accuracy? Skyrockets. Next: ML models await!

Step 4 - Core Machine Learning Concepts

Data's prepped? Time to teach computers tricks! My first ML model predicted house prices - nailed it after epic fails. This is where theory meets fun. Lofer.tech squad, imagine classifying Insta comments; Neody IT pros, business predictors. 4-6 weeks of hands-on: Load data, train, tweak. Scikit-learn's your playground - no PhDs required.

Supervised vs Unsupervised Models

Labeled teacher vs. solo explorer.

-

Supervised: Data has answers (input: house size, output: price). Predicts future labels.

-

Unsupervised: No labels. Finds patterns/groups automatically.

-

Quick pick: Labels available? Supervised. Exploring? Unsupervised.

Most beginner wins (and most everyday industry work) come from supervised learning.

Regression Algorithms

Predict numbers (continuous outputs). "How much?"

-

Linear Regression: Straight line fit. House price = slope * size + intercept. Simple, interpretable.

-

Decision Trees/Random Forest: Tree of yes/no questions. Handles curves better.

-

Example: Predict salary from experience. Code:

from sklearn.linear_model import LinearRegression

model = LinearRegression()

model.fit(X_train, y_train) # X = features (years of experience), y = salaries

predictions = model.predict(X_test)

Real-world: Stock prices, sales forecasts.
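To see what model.fit does in the single-feature case, here's ordinary least squares written out in plain Python: slope = covariance(x, y) / variance(x), intercept = mean(y) - slope × mean(x). The experience/salary numbers are made up; scikit-learn's LinearRegression solves the same least-squares problem (for any number of features), so on this data it should land on the same line.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # The 1/n factors in covariance and variance cancel, so plain sums are enough
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: years of experience -> salary (in $1000s)
experience = [1, 2, 3, 5, 8]
salary = [45, 50, 54, 65, 80]

slope, intercept = fit_line(experience, salary)
print(f"salary = {slope:.2f} * years + {intercept:.2f}")
print(f"predicted salary at 6 years: {slope * 6 + intercept:.1f}k")
```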

Classification Algorithms

Predict categories (discrete outputs). "Which one?"

-

Logistic Regression: Sigmoid curve for yes/no (e.g., spam/not).

-

K-Nearest Neighbors (KNN): "You're like your 5 closest neighbors."

-

Support Vector Machines (SVM): Finds the best dividing line.

-

Example: Iris flower species. Code snippet:

from sklearn.ensemble import RandomForestClassifier

clf = RandomForestClassifier()

clf.fit(X_train, y_train) # y = species labels

Apps: Email spam, disease diagnosis, customer churn.
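The KNN idea ("you're like your closest neighbors") is short enough to write from scratch. This sketch uses plain Python, straight-line (Euclidean) distance, a majority vote, and made-up 2-D email features; scikit-learn's KNeighborsClassifier does the same job with faster neighbor search.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=5):
    """Label `query` by majority vote among its k nearest training points."""
    # Distance from the query to every training point
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest and let them vote
    nearest = sorted(distances)[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical emails: (number of links, number of ALL-CAPS words)
points = [(0, 1), (1, 0), (1, 2), (2, 1), (8, 9), (9, 7), (7, 8), (10, 10)]
labels = ["ham", "ham", "ham", "ham", "spam", "spam", "spam", "spam"]

print(knn_predict(points, labels, (1, 1), k=3))  # ham
print(knn_predict(points, labels, (9, 9), k=3))  # spam
```

Notice there's no real "training" step: KNN just stores the data and does all its work at prediction time, which is why it slows down on big datasets.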

Clustering Basics

Unsupervised grouping. "Who's similar?"

-

K-Means: Pick K clusters, assign each point to its nearest center, move each center to the average of its points, and repeat until the centers stop moving.

-

Example: Group shoppers (budget + buys) for targeted ads.

-

Code:

from sklearn.cluster import KMeans

kmeans = KMeans(n_clusters=3)

clusters = kmeans.fit_predict(df[['feature1', 'feature2']])
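Here's that assign-then-move loop as a plain-Python sketch on made-up shopper data (budget, monthly purchases). Starting from the first K points as centers is a simplification for readability; scikit-learn's KMeans uses the smarter k-means++ starting scheme by default.

```python
import math


def kmeans(points, k, iterations=10):
    """Plain K-Means: returns (centers, cluster index for each point)."""
    centers = [list(p) for p in points[:k]]  # naive start: the first k points
    for _ in range(iterations):
        # Step 1: assign every point to its nearest center
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points
        ]
        # Step 2: move each center to the mean of the points assigned to it
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # leave a center in place if it lost all its points
                centers[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centers, assignments


# Hypothetical shoppers: (budget in $100s, purchases per month)
shoppers = [(1, 2), (9, 8), (1, 1), (2, 2), (8, 9), (9, 9)]
centers, groups = kmeans(shoppers, k=2)
print(centers)  # roughly [[1.33, 1.67], [8.67, 8.67]]
print(groups)   # [0, 1, 0, 0, 1, 1]
```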

Introduction to Scikit-Learn

The ML Swiss Army knife. pip install scikit-learn.

-

Pipeline: Load → Split (train/test) → Fit → Predict → Evaluate (accuracy score).

-

Full flow:

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

predictions = clf.predict(X_test) # clf = a classifier fit on X_train, y_train (as above)

print(accuracy_score(y_test, predictions))

Evaluate: Accuracy, precision (for imbalanced data). Overfitting? Cross-validate.

Practice on Iris/Titanic datasets - hit 85% accuracy fast. You're building real ML now!
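Here's the whole Load → Split → Fit → Predict → Evaluate loop end to end on the Iris data that ships with scikit-learn (load_iris), so there's nothing to download. The random_state values just make the split and the forest repeatable; the exact score can shift a little if you change them.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Load: 150 flowers, 4 measurements each, 3 species
X, y = load_iris(return_X_y=True)

# Split: hold back 20% as an unseen test set (stratify keeps species balanced)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Fit: learn patterns from the training set only
clf = RandomForestClassifier(n_estimators=100, random_state=42)
clf.fit(X_train, y_train)

# Predict + Evaluate on the held-out flowers
predictions = clf.predict(X_test)
print(f"Test accuracy: {accuracy_score(y_test, predictions):.2f}")
```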

Step 5 - Model Evaluation and Improvement

Your model's predictions are in - now the real test: Does it actually work? I once built a "perfect" spam detector that bombed on new emails (classic flop). This step's like grading your work. Lofer.tech creators, tune for audience predictions; Neody IT pros, deploy reliable forecasters. 2-4 weeks: Test, tweak, triumph.

Training vs Testing Data

Never trust a model that memorizes answers.

-

Training data: 80% - teaches the model patterns.

-

Testing data: 20% - hidden set to check real-world performance. Split with train_test_split.

-

Why?: Simulates future unknown data. No peeking!

Rule: Train on one pile, test on fresh. Prevents cheating.

Overfitting and Underfitting

Goldilocks zone: Not too tight, not too loose.

-

Overfitting: Model memorizes training data (99% accurate there, 60% on test). Like cramming for one test.

-

Underfitting: Too simple, misses patterns (60% everywhere). Lazy learner.

-

Fixes: For overfitting, more data, simpler models, or regularization (penalizes complexity). For underfitting, a more expressive model or better features.

Visual: Plot learning curves - training error low, test high? Overfit alert.
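You can watch overfitting happen with a quick experiment: an unlimited-depth decision tree versus a depth-3 tree on synthetic, deliberately noisy data from make_classification (flip_y=0.2 assigns random labels to about 20% of samples). The exact numbers depend on the random seeds; the thing to look for is the gap between training and test accuracy.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with label noise, so there are "wrong answers" to memorize
X, y = make_classification(n_samples=500, n_features=10, flip_y=0.2, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

results = {}
for depth in (None, 3):  # None = keep splitting until every training point fits
    tree = DecisionTreeClassifier(max_depth=depth, random_state=0)
    tree.fit(X_train, y_train)
    train_acc = tree.score(X_train, y_train)
    test_acc = tree.score(X_test, y_test)
    results[depth] = (train_acc, test_acc)
    print(f"max_depth={depth}: train {train_acc:.2f}, test {test_acc:.2f}")
```

The unlimited tree hits 100% on training data because it memorizes even the noisy labels; compare its test score with the shallow tree's to see which one actually learned the pattern.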

Performance Metrics

Accuracy's a start, but pick the right yardstick.

-

Regression: Mean Squared Error (MSE) - average prediction error squared. Lower = better.

-

Classification: Accuracy (correct %), Precision (of everything flagged positive, how much really was?), Recall (of all actual positives, how many did you catch?), F1 (the harmonic mean that balances precision and recall).

Code:

from sklearn.metrics import accuracy_score, classification_report

print(accuracy_score(y_test, y_pred))

print(classification_report(y_test, y_pred))

Imbalanced data (99% no-spam)? Use F1 or ROC-AUC.
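To see why accuracy alone can mislead, here are the metrics computed by hand on ten made-up spam predictions (1 = spam), next to a lazy model that always says "not spam". classification_report reports these same per-class numbers.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one class by counting."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # caught spam
    fp = sum(t != positive and p == positive for t, p in pairs)  # false alarms
    fn = sum(t == positive and p != positive for t, p in pairs)  # missed spam
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 0, 0, 0, 0, 0, 0, 0, 1, 1]  # hypothetical labels: 3 spam, 7 not
model = [1, 0, 0, 0, 0, 0, 0, 1, 0, 1]   # an imperfect real model
lazy = [0] * 10                          # always predicts "not spam"

for name, y_pred in [("model", model), ("lazy", lazy)]:
    p, r, f = precision_recall_f1(y_true, y_pred)
    print(f"{name}: accuracy={accuracy(y_true, y_pred):.1f} "
          f"precision={p:.2f} recall={r:.2f} F1={f:.2f}")
```

The lazy model still scores 70% accuracy while catching zero spam; its F1 of 0 exposes it immediately.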

Cross-Validation

One test split? Risky. Rotate like exam practice tests.

-

K-Fold: Split into K parts (e.g., 5), train on 4, test on 1, average. Robust score.

Code:

from sklearn.model_selection import cross_val_score

scores = cross_val_score(model, X, y, cv=5)

print(scores.mean()) # Reliable average

Lofer.tech tip: CV your content classifier for steady Insta insights.
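Under the hood, K-Fold is careful bookkeeping over row indices. This plain-Python sketch (no shuffling, for clarity; scikit-learn's KFold can shuffle for you) shows how 5 folds rotate so every row lands in the test set exactly once.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_indices, test_indices) for each of k folds, in order."""
    indices = list(range(n_samples))
    # Spread leftover rows over the first folds so sizes differ by at most 1
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


for fold, (train, test) in enumerate(k_fold_indices(10, k=5), start=1):
    print(f"fold {fold}: test rows {test}, train on {len(train)} rows")
```

cross_val_score does this rotation, fits a fresh model per fold, and hands back the k scores you then average.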

Hyperparameter Tuning

Dial in perfection - like tuning a guitar.

-

Grid Search: Try all combos (e.g., tree depth 3-10).

-

Random Search: Sample smartly - faster.

-

Code:

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestClassifier

param_grid = {'n_estimators': [50, 100]}

grid = GridSearchCV(RandomForestClassifier(), param_grid, cv=5)

grid.fit(X_train, y_train)

print(grid.best_params_)

Start manual, scale to tools like Optuna later.

Master this, your models go from meh to money-makers. Celebrate with a project share on Lofer.tech!

Step 6 - Feature Engineering (The Hidden Superpower)

Models are only as good as their ingredients. Feature engineering's like jazzing up a basic recipe into gourmet - turns okay accuracy into wow. I boosted a meh predictor 20% just by tweaking inputs. Underrated magic! Lofer.tech creators, engineer for viral post features; Neody IT squad, enterprise edges. 3-5 weeks: Experiment wildly.

Encoding Categorical Data

Text/categories → numbers machines love.

-

One-Hot Encoding: Colors "red/blue" → [1, 0] / [0, 1]. No order assumed. pd.get_dummies().

-

Label Encoding: Ordinal like "low/medium/high" → 0/1/2.

-

Target Encoding: Less common. For high-cardinality columns (many categories), replace each category with the average target value for that category.

Code win:

import pandas as pd

df_encoded = pd.get_dummies(df, columns=['color', 'size'])

Lofer.tech: Encode post types (Reel/Story) for engagement models.
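pd.get_dummies covers one-hot. For the other two, here's a plain-pandas sketch on made-up data: map() for ordinal labels where order matters, and groupby().mean() for a basic target encoding. Caveat: real target encoding should compute those averages on training data only (often with smoothing), or the answer leaks into your features.

```python
import pandas as pd

# Hypothetical data: priority (ordered), city (many categories), and whether the customer bought
df = pd.DataFrame({
    "priority": ["low", "high", "medium", "low", "high", "medium"],
    "city": ["Pune", "Delhi", "Pune", "Mumbai", "Delhi", "Pune"],
    "bought": [0, 1, 1, 0, 1, 0],
})

# Label (ordinal) encoding: spell out the order so low < medium < high
priority_order = {"low": 0, "medium": 1, "high": 2}
df["priority_encoded"] = df["priority"].map(priority_order)

# Target encoding: replace each city with its average "bought" rate
city_rates = df.groupby("city")["bought"].mean()
df["city_encoded"] = df["city"].map(city_rates)

print(df)
```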

Scaling and Normalization

Equalize feature powerhouses - don't let salary dwarf age.

-

Standardization: Mean 0, std 1. Good for most (StandardScaler).

-

Min-Max Scaling: 0-1 range. Neural nets love it (MinMaxScaler).

-

When?: Distance-based algos (KNN, SVM). Trees? Skip.

Code:

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

Intuition: Tall guy vs. rich guy in a race - scale the track.
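Each scaler boils down to one formula. Here they are in plain Python on three made-up ages: standardization computes z = (x - mean) / std using the population standard deviation (which is what StandardScaler uses), and min-max computes (x - min) / (max - min).

```python
import statistics


def standardize(values):
    """z = (x - mean) / std, with the population std like StandardScaler."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(x - mean) / std for x in values]


def min_max(values):
    """Rescale so the smallest value becomes 0 and the largest becomes 1."""
    lo, hi = min(values), max(values)
    return [(x - lo) / (hi - lo) for x in values]


ages = [20, 40, 60]
print([round(z, 3) for z in standardize(ages)])  # [-1.225, 0.0, 1.225]
print(min_max(ages))                             # [0.0, 0.5, 1.0]
```

In a real project, fit the scaler on training data only and reuse those same mean/std (or min/max) numbers on test data, which is what scaler.fit_transform followed by scaler.transform does.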

Handling Missing Values

No data left behind.

-

Drop: If <5% missing (df.dropna()).

-

Impute: Mean/median (df.fillna(df.mean(numeric_only=True))), or KNN imputer for smarts.

-

Advanced: Predict missings with models.

Pro: Check df.isnull().sum() first. Titanic age? Median fill works wonders.

Feature Selection Strategies

Ditch the noise - fewer, better features.

-

Filter: Correlation, chi-square - score each feature on its own and keep the high-impact ones (df.corr(numeric_only=True)).

-

Wrapper: Stepwise - add/remove, test model performance.

-

Embedded: Lasso regression shrinks weak ones to zero.

-

Code:

from sklearn.feature_selection import SelectKBest, f_classif

selector = SelectKBest(f_classif, k=5)

X_selected = selector.fit_transform(X, y)

Rule: Start with 10-20 features; aim for top 5-10. Boosts speed + accuracy.
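Here's a runnable version on scikit-learn's built-in Iris data. Iris only has 4 features, so this sketch keeps k=2 instead of 5; f_classif scores each feature with an ANOVA F-test against the species label.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, f_classif

iris = load_iris()
X, y = iris.data, iris.target

# Score every feature against the label, keep the 2 highest-scoring ones
selector = SelectKBest(f_classif, k=2)
X_selected = selector.fit_transform(X, y)

for name, score in zip(iris.feature_names, selector.scores_):
    print(f"{name:<20} F-score {score:8.1f}")

kept = [iris.feature_names[i] for i in selector.get_support(indices=True)]
print("Kept:", kept, "->", X_selected.shape)
```

Printing the scores, not just the kept columns, is a good habit: it shows whether the cutoff fell between a strong and a weak feature or split two near-equal ones.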

Feature engineering's where pros separate - your models will crush now. Lofer.tech share your first engineered project!

Step 7 - Introduction to Deep Learning

Ready to level up? Deep Learning (DL) is ML with brainpower - handles images, speech like a human. My first neural net classified dog pics; hooked forever. If basics clicked, DL's next. Lofer.tech, build image filters; Neody IT, advanced analytics. 4-6 weeks: Start small, scale epic. Needs GPU? Colab's free.

Neural Networks Explained Simply

Picture a brain: Neurons connected in layers.

-

Input Layer: Raw data (pixels, words).

-

Hidden Layers: Process patterns (edges → shapes → "cat").

-

Output Layer: Prediction (90% cat).

Nodes chat: Each one computes input * weight + bias, passes it through an activation function, and sends the result onward. Stack layers = "deep."

Analogy: Assembly line - raw ore → metal → car.

Activation Functions

"Fire or nah?" Adds non-linearity so nets learn curves.

-

ReLU: Max(0, x) - fast and the go-to default, though neurons can "die" (get stuck outputting 0).

-

Sigmoid: S-curves for 0-1 probs (old-school).

-

Tanh: -1 to 1, zero-centered.

Code glimpse:

import tensorflow as tf

model = tf.keras.Sequential([

tf.keras.layers.Dense(64, activation='relu'),

tf.keras.layers.Dense(1, activation='sigmoid')

])
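Stripped of the framework, a single Dense neuron is a weighted sum plus a bias, pushed through an activation. Here's that in plain Python with the three activations above; the inputs, weights, and bias are made-up numbers.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def tanh(z):
    return math.tanh(z)


def neuron(inputs, weights, bias, activation):
    """One neuron: weighted sum of inputs plus bias, then the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


inputs = [0.5, -1.0, 2.0]   # hypothetical features
weights = [0.8, 0.2, -0.5]  # hypothetical learned weights
bias = 0.1

# z = 0.4 - 0.2 - 1.0 + 0.1 = -0.7
for fn in (relu, sigmoid, tanh):
    print(f"{fn.__name__:>7}: {neuron(inputs, weights, bias, fn):.3f}")
```

Same z, three different outputs: ReLU clips the negative value to 0, sigmoid turns it into a probability below 0.5, and tanh keeps the sign.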

Loss Functions

Error score to minimize - like gym reps for accuracy.

-

Regression: MSE (squared differences).

-

Classification: Binary Cross-Entropy (prob mismatch penalty).

-

Goal: Backprop tweaks weights to shrink loss.

Intuition: High loss = bad predictions; train till low.
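Both losses are short formulas. Here they are in plain Python on made-up predictions: MSE averages squared differences, and binary cross-entropy averages -[y·log(p) + (1 - y)·log(1 - p)], which punishes confident wrong answers hardest. The small eps clip is a common guard against log(0).

```python
import math


def mse(y_true, y_pred):
    """Mean squared error: the average of squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, probs, eps=1e-12):
    """Average log-loss for 0/1 labels and predicted probabilities of class 1."""
    total = 0.0
    for t, p in zip(y_true, probs):
        p = min(max(p, eps), 1 - eps)  # keep log() away from 0
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


print(mse([3.0, 5.0], [2.5, 5.5]))               # 0.25
print(binary_cross_entropy([1, 0], [0.9, 0.1]))  # confident and right: ~0.105
print(binary_cross_entropy([1, 0], [0.1, 0.9]))  # confident and wrong: ~2.303
```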

Backpropagation Basics

Magic optimizer: Learn from mistakes.

-

Forward pass: Predict.

-

Calculate loss.

-

Backward pass: Nudge weights opposite gradient (chain rule downhill).

-

Repeat: Epochs till convergence.

No calc needed - frameworks auto-do it. Visualize: Ball rolling to valley bottom.
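Here's the forward → loss → backward → repeat loop for the smallest possible network, one sigmoid neuron, written in plain Python. It learns a made-up "pass only if you studied and slept" rule (logical AND). For a sigmoid paired with binary cross-entropy, the chain rule simplifies so the gradient with respect to z is just (prediction - label), which is why the backward pass is so short. Frameworks like TensorFlow and PyTorch automate exactly these gradient steps for big networks.

```python
import math


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


# Hypothetical data: (studied, slept) -> passed, i.e. logical AND
data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

w1, w2, b = 0.0, 0.0, 0.0  # start from zero weights
learning_rate = 0.5
losses = []

for epoch in range(5000):
    dw1 = dw2 = db = 0.0
    total_loss = 0.0
    for (x1, x2), y in data:
        p = sigmoid(w1 * x1 + w2 * x2 + b)  # 1. forward pass
        total_loss += -math.log(p) if y == 1 else -math.log(1 - p)  # 2. loss
        error = p - y                       # 3. backward: dLoss/dz
        dw1 += error * x1                   #    chain rule: dz/dw1 = x1
        dw2 += error * x2
        db += error
    # 4. Nudge each parameter against its average gradient, then repeat
    n = len(data)
    w1 -= learning_rate * dw1 / n
    w2 -= learning_rate * dw2 / n
    b -= learning_rate * db / n
    losses.append(total_loss / n)
    if epoch % 1000 == 0:
        print(f"epoch {epoch:>4}: loss {losses[-1]:.4f}")

for (x1, x2), y in data:
    p = sigmoid(w1 * x1 + w2 * x2 + b)
    print(f"{x1} AND {x2}: predicted {p:.2f} (label {y})")
```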

Introduction to TensorFlow / PyTorch

Fab two: Google's TensorFlow (production-ready), Meta's PyTorch (research-flexible).

-

TensorFlow/Keras: Easy API: model.compile(optimizer='adam', loss='mse'); model.fit(X, y, epochs=50).

-

PyTorch: Dynamic, Pythonic. Tensors like NumPy + gradients.

-

Starter Colab: MNIST digits - 95% accuracy in 10 lines.

Lofer.tech: PyTorch for quick Insta image classifiers. Neody IT: TensorFlow for scale.

# TensorFlow hello world

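model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy']) # compile once before training

model.fit(X_train, y_train, epochs=10)

loss, accuracy = model.evaluate(X_test, y_test)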

DL's intimidating at first - nail MNIST, confidence soars!

Step 8 - Choosing Your AI Specialization

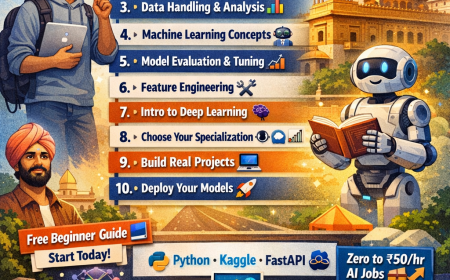

Roadmap crushed? Time to specialize - like picking your superhero power. I went NLP for chatbots; exploded my gigs. 2026's booming: CV up 40% jobs (Indeed), NLP everywhere post-ChatGPT. Lofer.tech, eye visuals/NLP for content; Neody IT, forecasting/recs for biz. Dive deep in one (2-3 months), blend later.

Computer Vision (CV)

Eyes for machines - see and understand images/videos.

-

What: Object detection, face recog, self-driving cams.

-

Tools: OpenCV, TensorFlow/PyTorch (models like YOLO, ResNet).

-

Projects: Build Instagram filter, medical X-ray analyzer.

-

Jobs: Autonomous cars (Tesla), security (85k openings '26).

-

Fit if: Love pics/videos. GPU handy.

Lofer.tech gold: Auto-caption Reels.

Natural Language Processing (NLP)

Machines that grok words - chat, translate, summarize.

-

What: Sentiment analysis, chatbots, translation.

-

Tools: Hugging Face Transformers, spaCy, GPT fine-tunes.

-

Projects: Build tweet bot, resume parser.

-

Jobs: Virtual assistants (Google), content AI (exploding).

-

Fit if: Wordsmith? Customer service fan.

Neody IT: Automate reports/emails.

Time Series & Forecasting

Predict future from past trends - stocks, weather, sales.

-

What: Forecasting with methods like ARIMA and LSTMs (RNNs built for sequences).

-

Tools: Prophet, statsmodels, PyTorch Forecasting.

-

Projects: Stock predictor, website traffic forecaster.

-

Jobs: Finance (Goldman), supply chain (Amazon).

-

Fit if: Numbers over time excite you.

Lofer.tech: Predict viral post timing.

Recommendation Systems

"Your next binge" - personalized magic.

-

What: Collaborative filtering, content-based.

-

Tools: Surprise lib, TensorFlow Recommenders.

-

Projects: Netflix clone, Spotify playlist generator.

-

Jobs: E-comm (Amazon), streaming (Netflix/Spotify).

-

Fit if: Love matching people to stuff.
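Collaborative filtering in its simplest user-based form fits in a few lines: find the user whose ratings look most like yours (cosine similarity here), then suggest what they loved that you haven't seen. The users, movies, and ratings are made up; libraries like Surprise or TensorFlow Recommenders use far more robust methods.

```python
import math

# Hypothetical ratings (1-5); a missing movie means "not watched"
ratings = {
    "asha": {"Inception": 5, "Interstellar": 5, "Notting Hill": 1},
    "ravi": {"Inception": 4, "Interstellar": 5, "Tenet": 5, "Notting Hill": 2},
    "meera": {"Notting Hill": 5, "Love Actually": 4, "Inception": 1},
}


def cosine_similarity(a, b):
    """Cosine of the angle between two users' ratings on movies both rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[m] * b[m] for m in shared)
    norm_a = math.sqrt(sum(a[m] ** 2 for m in shared))
    norm_b = math.sqrt(sum(b[m] ** 2 for m in shared))
    return dot / (norm_a * norm_b)


def recommend(user):
    """Suggest unseen movies rated 4+ by the most similar other user."""
    others = [u for u in ratings if u != user]
    twin = max(others, key=lambda u: cosine_similarity(ratings[user], ratings[u]))
    unseen = [m for m, r in ratings[twin].items() if m not in ratings[user] and r >= 4]
    return twin, unseen


print(recommend("asha"))  # ('ravi', ['Tenet'])
```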

Quick compare:

| Specialization | Ease for Beginners | Hot Jobs '26 | Fun Project |
|---|---|---|---|
| Computer Vision | Medium (GPU) | High | Dog breed classifier |
| NLP | Easy | Very High | Chatbot |
| Time Series | Medium | High | Crypto predictor |
| Rec Systems | Easy | High | Movie recommender |

Pick by passion + market. Start a Kaggle comp in your fave - portfolio booster!

Step 9 - Building Real Projects (From Learning to Application)

Theory's cute, but projects = proof. My first portfolio nabbed freelance AI work. No more "I know Python" - show running apps! Lofer.tech, build content tools; Neody IT, biz solvers. Dedicate 1-2 months: One beginner, one intermediate. Deploy free on Streamlit/Hugging Face.

Beginner Project Ideas

Nail basics, 1-2 weeks each. Use Colab, public data.

-

Titanic Survival Predictor: Supervised classification. Clean data → Random Forest → 82% accuracy. (Kaggle dataset)

-

House Price Regression: Feature eng → Linear Regression. Predict from size/location.

-

Iris Flower Classifier: DL intro - neural net on classic data.

-

Sentiment Analyzer: NLP on tweets (VADER or simple model).

Code base: Scikit-learn + Streamlit app. Lofer.tech: Analyze comment vibes.

Intermediate Project Progression

Stack skills, 3-4 weeks. Add DL, deployment.

-

Image Classifier (Cats vs Dogs): CV with TensorFlow - transfer learning (pre-trained MobileNet).

-

Stock Price Forecaster: Time series LSTM on Yahoo Finance data.

-

Movie Recommender: Collaborative filtering on MovieLens.

-

Chatbot for Q&A: NLP fine-tune GPT-2 on custom data.

Progression: V1 basic model → V2 tuned/evaluated → V3 deployed API.

Neody IT: Customer churn dashboard.

Portfolio-Building Strategies

10 projects? Quality > quantity. Aim for 3-5 standout projects.

-

GitHub Repos: Clean code, README with problem/solution/metrics/screenshots.

-

Jupyter → Apps: Notebook prototypes → interactive Streamlit/Heroku/Gradio.

-

Kaggle: Compete, rank - gold for resumes.

-

Blog It: Write "How I Built X" on Neody IT/Lofer.tech. Explains thinking.

-

Diversity: One per specialization.

Track: Accuracy, business impact (e.g., "Saved 20% analysis time").

How to Showcase Projects Professionally

From hobby to hireable.

-

GitHub README Pro: Badges (stars, forks), GIF demos, live links.

-

Personal Site: Carrd/WordPress - embed projects, tech stack.

-

LinkedIn/Lofer.tech: Post updates, "Built X - check it!" Tease results.

-

Video Demos: 1-min YouTube: Problem → Demo → Code peek.

-

Resume Bullet: "Developed CV model (95% acc) predicting plant diseases - deployed on Streamlit, 500+ users."

Freelance tip: Upwork gigs start at $50/hr with 3 solid projects.

Projects = your ticket. Build one this week - what's your pick?

Step 10 - Deploying Machine Learning Models

Models trained? Useless on your laptop. Deployment = sharing magic with the world. I demo'd a local predictor once - client's laptop died mid-pitch. Fail. Now? Live APIs everywhere. Lofer.tech, deploy content analyzers; Neody IT, client dashboards. 2-4 weeks: API-fy your portfolio stars.

Turning Models into APIs

Wrap model in a web endpoint - input data, get predictions.

-

Why?: Apps/websites call it (e.g., upload pic → "cat!" response).

-

Flow: Save model (joblib.dump), load in app, serve POST/GET requests.

-

Starter: Pickle your trained model:

import joblib; joblib.dump(model, 'model.pkl').

Real power: Anyone accesses via URL.
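Here's the save → load round trip as a runnable sketch: train a tiny scikit-learn model on made-up numbers, dump it with joblib, load it back (as your API process would at startup), and check that the reloaded model predicts the same thing. The temporary folder stands in for wherever your app keeps model.pkl. Only load joblib/pickle files you trust, since loading them can run code.

```python
import os
import tempfile

import joblib
from sklearn.linear_model import LinearRegression

# Train a tiny model on made-up data that follows y = 2x + 1
X = [[0], [1], [2], [3]]
y = [1, 3, 5, 7]
model = LinearRegression().fit(X, y)

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "model.pkl")
    joblib.dump(model, path)    # save once, after training
    loaded = joblib.load(path)  # load once, when the API starts up

# Same input -> same prediction, so the saved file is a faithful copy
print(model.predict([[10]]), loaded.predict([[10]]))  # both about [21.]
```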

FastAPI / Flask Basics

FastAPI (modern, fast, auto-docs). Flask (simple, battle-tested).

-

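FastAPI (2026 fave - async speed):

from fastapi import FastAPI

import joblib

app = FastAPI()

model = joblib.load('model.pkl') # the model saved earlier with joblib.dump

@app.post('/predict')

def predict(data: dict):

    # Values are used in JSON key order, so send features in the order the model was trained on

    prediction = model.predict([list(data.values())])

    return {'prediction': prediction[0].item()} # .item() turns the NumPy value into plain Python so it serializes to JSON

Run (with the code saved as main.py): uvicorn main:app, then open /docs for the interactive UI.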

Flask (quick):

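from flask import Flask, request

import joblib

app = Flask(__name__)

model = joblib.load('model.pkl') # same saved model as the FastAPI example

@app.route('/predict', methods=['POST'])

def predict():

    data = request.json # a JSON object of feature values

    prediction = model.predict([list(data.values())])

    return {'prediction': prediction[0].item()} # Flask turns a returned dict into a JSON response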

Test: Postman or curl. Lofer.tech: API for Reel sentiment.

Model Deployment Concepts

From code to cloud.

-

Containers: Docker - package app + deps. A Dockerfile bundles Python/libs.

-

Cloud Free Tiers:

-

Hugging Face Spaces (ML-focused).

-

Vercel/Render (FastAPI one-click).

-

Heroku (classic, but paid plans only since free dynos ended in November 2022).

-

AWS SageMaker/Google Cloud Run (scale later).

-

-

Steps: Git push → auto-deploy. Monitor with logs.

Example: Streamlit for dashboards (streamlit run app.py locally, then deploy on Streamlit Community Cloud for a shareable link).

Introduction to MLOps

Ops for ML - automate train/deploy/monitor.

-

CI/CD: GitHub Actions - test and deploy your code on every push.

-

Versioning: MLflow/DVC for models/data.

-

Monitoring: Track drift (data changes break model?). Tools: Weights & Biases.

-

2026 Tip: Serverless (no servers to manage) via AWS Lambda.

Neody IT: MLOps = reliable biz AI.
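Drift monitoring can start very simple. This sketch compares a feature's recent live values against its training values and raises a flag when the live mean moves more than a chosen number of training standard deviations. The feature, the numbers, and the threshold of 1.0 are hypothetical; dedicated monitoring tools use proper statistical tests and dashboards.

```python
import statistics


def drift_score(train_values, live_values):
    """How far the live mean has moved, in units of the training std."""
    train_mean = statistics.fmean(train_values)
    train_std = statistics.pstdev(train_values)
    return abs(statistics.fmean(live_values) - train_mean) / train_std


def check_drift(train_values, live_values, threshold=1.0):
    score = drift_score(train_values, live_values)
    status = "DRIFT - consider retraining" if score > threshold else "ok"
    return score, status


# Hypothetical "customer age" feature: training data vs. two weeks of live traffic
train_ages = [30, 35, 40, 45, 50]
stable_week = [38, 42, 41, 39, 40]
shifted_week = [55, 60, 58, 62, 65]

for name, week in [("stable", stable_week), ("shifted", shifted_week)]:
    score, status = check_drift(train_ages, week)
    print(f"{name}: shift = {score:.2f} std -> {status}")
```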

Deploy your Titanic model today - live link in bio!

Tools & Technology Stack for Beginners

Roadmap down, now gear up! Tools make or break flow. My stack evolved from trial-error; here's 2026's beginner gold - free-first, scalable. Lofer.tech mobile warriors: Colab-heavy. Neody IT: Full local/cloud. Bookmark this cheat sheet.

Python Ecosystem

Core language + must-haves (pip install all).

-

Essentials: Jupyter/Colab (experiment), VS Code/PyCharm (edit), Git (version control).

-

Libs Recap:

| Lib | Use | Why Beginners Love It |
|---|---|---|
| NumPy/Pandas | Data crunching | Excel → pro |
| Matplotlib/Seaborn/Plotly | Visualization | Instant insights |
| Scikit-learn | Classic ML | Covers 80% of projects |
| TensorFlow/PyTorch | Deep learning | Future-proof |

Pro tip: Use separate conda environments per project to avoid dependency conflicts.

ML Frameworks

Pick 1-2; don't hoard.

-

Scikit-learn: ML bread-and-butter. Regression to clustering.

-

TensorFlow/Keras: Stable DL, production (Google backing).

-

PyTorch: Flexible research (Meta fave, dynamic graphs).

-

Hugging Face: Plug-and-play pretrained NLP/CV models, e.g. pipeline('sentiment-analysis') from the transformers library.

-

2026 Newbie Pick: Keras for simplicity, HF for pretrained models.

Development Tools

Speed up 10x.

-

IDEs:

-

VS Code + extensions (Python, Jupyter, GitHub Copilot).

-

Cursor (AI-powered code editor - a game-changer in '26).

-

JupyterLab (notebooks forever).

-

-

Cloud Notebooks: Google Colab (free GPU), Kaggle (competitions).

-

Versioning: GitHub (free repos), DVC (data/models).

-

Debug: pdb or VS Code debugger.

Lofer.tech: Cursor for quick scripts on phone-linked laptop.
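The idea behind DVC from the Versioning bullet: big data and model files stay out of Git, while Git tracks a small pointer file holding each file's content hash (DVC records an MD5). This is a minimal standard-library sketch of that idea, not DVC itself; the manifest format and function names are hypothetical.

```python
import hashlib
import json
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets never need to fit in memory."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(paths, manifest_path: Path) -> dict:
    """Record {file name: hash}; commit this small file to Git, not the data."""
    manifest = {p.name: file_md5(p) for p in paths}
    manifest_path.write_text(json.dumps(manifest, indent=2, sort_keys=True))
    return manifest


def changed_files(paths, manifest_path: Path) -> list:
    """Names of files whose current hash differs from the recorded one (or are new)."""
    recorded = json.loads(manifest_path.read_text())
    return [p.name for p in paths if recorded.get(p.name) != file_md5(p)]


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp) / "train.csv"
        data.write_bytes(b"age,fare\n22,7.25\n")
        manifest = Path(tmp) / "data.manifest.json"
        write_manifest([data], manifest)
        data.write_bytes(b"age,fare\n22,7.25\n38,71.28\n")  # dataset updated
        print(changed_files([data], manifest))  # prints ['train.csv']
```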

Cloud Basics

Deploy without servers.

| Platform | Free Tier | Best For | Beginner Ease |
|---|---|---|---|
| Google Colab | GPU/TPU | Training | Easiest |
| Hugging Face Spaces | Models/apps | NLP/CV | ML-focused |
| Vercel/Render | APIs | FastAPI | One-click |
| AWS/GCP Free | Everything | Scale | Learning curve |

Start: Colab → HF deploy. Budget? $5-10/mo scales fine.

My Stack '26: Colab + VS Code + FastAPI + HF + GitHub. Yours? Tweak and conquer!

Suggested Learning Timeline (Beginner to Intermediate)

Zero to job-ready in 6 months? Doable - I went from newbie to first client in 7. Consistency > cramming. This timeline assumes 10-15 hrs/week (fits full-time jobs). Lofer.tech, weave in content creation; Neody IT, align with certs. Adjust for life - progress > perfection.

Month-by-Month Progression

Build like Lego: Foundations first, towers later.

-

Month 1: Foundations

Python + Math (Steps 1-2). Goal: Code tip calculator, grasp vectors. Resources: Codecademy Python, Khan Academy linear algebra. -

Month 2: Data Mastery

Data handling (Step 3). Goal: Clean Titanic dataset, build histograms. Pandas 100. -

Month 3: Core ML

Models + Eval (Steps 4-5). Goal: 85% Titanic accuracy. Kaggle submit! -

Month 4: Pro Techniques

Features + DL Intro (Steps 6-7). Goal: Tune model + MNIST classifier. -

Month 5: Specialize & Build

Pick path + Projects (Steps 8-9). Goal: 2 deployed apps (e.g., recommender). -

Month 6: Deploy & Polish

APIs/Tools/Timeline review (Steps 10+). Goal: Portfolio site, LinkedIn showcase, freelance pitch.

Milestones: End Month 3 = first portfolio project. Track in Notion.
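To make the Month 1 goal concrete, here's one way "code a tip calculator, grasp vectors" might look in plain Python. A sketch; the function names and the even bill split are assumptions for illustration. The last function shows why vectors matter: a linear model's prediction for one example is the dot product of weights and features plus a bias.

```python
def tip_calculator(bill: float, tip_percent: float, people: int = 1) -> dict:
    """Return tip, total, and per-person share, rounded to cents."""
    if bill < 0 or tip_percent < 0 or people < 1:
        raise ValueError("bill and tip_percent must be >= 0, people >= 1")
    tip = bill * tip_percent / 100
    total = bill + tip
    return {
        "tip": round(tip, 2),
        "total": round(total, 2),
        "per_person": round(total / people, 2),
    }


def dot(a: list, b: list) -> float:
    """Dot product: multiply matching entries, then sum."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


def linear_prediction(weights: list, features: list, bias: float) -> float:
    # What a linear model computes for one example: w . x + b
    return dot(weights, features) + bias


if __name__ == "__main__":
    print(tip_calculator(bill=80.0, tip_percent=15, people=2))
    # prints {'tip': 12.0, 'total': 92.0, 'per_person': 46.0}
    print(linear_prediction([0.5, -1.0], [4.0, 1.0], bias=0.25))  # prints 1.25
```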

Realistic Expectations

No overnight unicorns - steady wins.

-

Wins: Month 2 feels slow; Month 4 clicks. If 80% of your models beat their baselines, you're working like a pro.

-

Stumbles: Bugs normal (Stack Overflow saves). First DL flops - expected.

-

Outcomes:

| Time | Skill Level | Opportunity |
|---|---|---|
| 3 Mo | Solid basics | Internships |
| 6 Mo | Intermediate | Freelance/Junior roles ($50k+) |
| 12 Mo | Advanced | AI Engineer ($100k+) |

India 2026: 2L+ entry jobs (TCS, Infosys AI wings).
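The "beat the baseline" check from the Wins bullet is easy to automate. A minimal sketch: the majority-class baseline always predicts the most common training label, and a model only adds value if its accuracy clears that bar. The labels in the demo are made-up toy values, not real Titanic results, and the function names are hypothetical.

```python
from collections import Counter


def accuracy(y_true: list, y_pred: list) -> float:
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def majority_baseline(y_train: list, n: int) -> list:
    """Always predict the most common training label."""
    most_common_label = Counter(y_train).most_common(1)[0][0]
    return [most_common_label] * n


def beats_baseline(y_train, y_test, y_pred) -> bool:
    base_acc = accuracy(y_test, majority_baseline(y_train, len(y_test)))
    model_acc = accuracy(y_test, y_pred)
    print(f"baseline={base_acc:.2f} model={model_acc:.2f}")
    return model_acc > base_acc


if __name__ == "__main__":
    # Toy labels (1 = survived, 0 = did not), made up for illustration
    y_train = [0, 0, 0, 1, 1, 0, 0, 1, 0, 0]
    y_test = [0, 1, 0, 0, 1, 0, 1, 0, 0, 0]
    y_pred = [0, 1, 0, 0, 0, 0, 1, 0, 0, 0]
    print(beats_baseline(y_train, y_test, y_pred))
    # prints baseline=0.70 model=0.90, then True
```

This is why the 85% Titanic goal is meaningful: in Kaggle's Titanic training set (549 of 891 passengers did not survive), predicting "did not survive" for everyone is already right about 62% of the time.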

Learning Consistency Tips

Sustain the fire.

-

Daily Habit: 30-60 mins code > binge. LeetCode easy + ML daily.

-

Pomodoro: 25-min sprints, 5-min breaks.

-

Accountability: Lofer.tech posts ("Day 15: Beat Titanic!"), Discord study buds.

-

Resources Mix: 60% practice, 20% videos (Sentdex, StatQuest), 20% books (Hands-On ML).

-

Avoid Burnout: Take a weekly day off and celebrate wins (pizza after a project). Track your streaks in an app.

-

Neody IT Hack: Weekly review - what stuck? Pivot fast.

Print this, pin it. 6 months from now? AI pro. Start today!

Final Thoughts: Becoming an AI Builder, Not Just a Learner

You've got the full roadmap - now the real magic: Shift from consumer to creator. I started as a tutorial zombie; today, I build AI that pays bills. You're next. Neody IT readers, this is your launchpad; Lofer.tech fam, turn Reels into real skills. AI's not a job - it's your superpower in 2026.

Mindset Shift

Ditch "I'll learn later." Builders think:

-

Problem-first: Spot pains (e.g., "Manual Insta analytics suck") → build solutions.

-

Fail fast: Bugs? Every fix is a win. 90% of first models suck - iterate.

-

Ship relentlessly: Deploy weekly. Feedback > perfection.

-

Bring the community in: Share on Lofer.tech, Reddit r/MachineLearning, Discord. Collaboration accelerates learning.

You're not studying - you're engineering the future.

Continuous Learning

AI evolves monthly - stay sharp, not stale.

-

Weekly: 1 new paper (arXiv Sanity), Kaggle comp.

-

Monthly: Specialization deep-dive (e.g., Llama 3 fine-tuning).

-

Yearly: Certs (Google AI, AWS ML), conferences (NeurIPS streams).

-

2026 Trends: Multimodal AI (text+image), Edge AI (phone models), Ethical AI.

Resources: Fast.ai courses, "Hands-On ML" book, Towards Data Science.

Future Roadmap

6 months in? Level up:

-

Short-term: Freelance (Upwork AI gigs), open-source contribs.

-

Mid: Junior role/specialist (India: 15-25LPA startups).

-

Long: Lead teams, launch AI startup. Multimodal agents by 2027.

Neody IT challenge: Build + deploy one project this month. Tag Lofer.tech - I'll hype it!

You're not just learning AI - you're building tomorrow. Start small, stay curious, ship big. What's your first project? DM on Lofer.tech. Let's go!
